So far this week, we’ve covered the explosion of new AI models and the hands-on, often messy, reality of building with them. Today, we’re bridging the gap between a personal project and the complex world of the enterprise.

How do we take these incredibly powerful, general-purpose AIs and make them genuinely useful for a specific business? How do we stop them from just making stuff up?

The Billion-Dollar Brain with Amnesia: AI’s Knowledge Problem

The problem with even the most advanced LLMs is that they are, in essence, brilliant minds with amnesia. They’ve been trained on a vast swathe of the public internet, but they know absolutely nothing about your company’s private data. They operate with a “knowledge cutoff” date, meaning they are oblivious to anything that happened after their training data was collected. These gaps are a major source of “hallucinations,” where the model confidently invents facts instead of admitting it doesn’t have the correct information.

I saw the flip side of this recently. I had a meeting with two financial advisors from my bank, and I was thoroughly unimpressed. Before the call, I spent less than an hour feeding my personal financial details and the relevant UK tax regulations into two different LLMs. The models came back with several efficient, legitimate strategies to improve my financial situation—strategies my accountant later confirmed were spot on. The AI, armed with specific, local knowledge, outperformed the human “experts.”

This is the core challenge for enterprises: how do we safely give these powerful AI brains access to our internal, proprietary knowledge?

RAG for the Rest of Us: Giving Your AI a Library Card

The answer is a technique called Retrieval-Augmented Generation, or RAG.

Don’t let the fancy name intimidate you. Think of it this way: instead of asking the AI to answer a question from its own, sometimes questionable, memory, you give it a library card to your company’s private library.

When a user asks a question, the RAG process works like this:

- Retrieve: The system first searches your company’s internal documents to find the most relevant information.

- Augment: It then takes this relevant information and “augments” the user’s original prompt, essentially saying, “Here’s the user’s question, and here are some relevant excerpts from our internal knowledge base.”

- Generate: Finally, it sends this augmented prompt to the LLM, which uses the provided context to generate an answer grounded in your own documents rather than in its memory alone.

This transforms the LLM from a general-purpose know-it-all into a domain-specific expert.
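
To make the three steps concrete, here is a minimal, self-contained Python sketch. It is deliberately naive and not how a production system would work: the “search” is simple word overlap rather than embeddings, the `DOCUMENTS` list is a hypothetical stand-in for your internal knowledge base, and the LLM call is passed in as a plain function so you could plug in whichever provider you use.

```python
import re
from typing import Callable

# Hypothetical internal documents standing in for a company knowledge base.
DOCUMENTS = [
    "Expense claims must be submitted within 30 days of purchase.",
    "The VPN must be used when accessing internal systems remotely.",
    "Annual leave requests need manager approval two weeks in advance.",
]


def tokenize(text: str) -> set[str]:
    """Lowercase word tokens; a crude stand-in for a real search index."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, docs: list[str], k: int = 1) -> list[str]:
    """Retrieve: return the k documents sharing the most words with the question."""
    q = tokenize(question)
    ranked = sorted(docs, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]


def augment(question: str, excerpts: list[str]) -> str:
    """Augment: wrap the user's question with the retrieved excerpts."""
    context = "\n".join(f"- {e}" for e in excerpts)
    return (
        "Answer using only the context below. "
        "If the answer is not there, say you don't know.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


def rag_answer(question: str, generate: Callable[[str], str]) -> str:
    """Generate: send the augmented prompt to an LLM, passed in as a function."""
    prompt = augment(question, retrieve(question, DOCUMENTS))
    return generate(prompt)


if __name__ == "__main__":
    # Stand-in "LLM" that just echoes the prompt, so you can see what it receives.
    print(rag_answer("How many days do I have to submit expense claims?", generate=lambda p: p))
```

The instruction to answer only from the context is what nudges the model away from its own memory; in a real system you would swap `retrieve` for a vector search and `generate` for an actual model call.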

The Visual Path: Building an Enterprise RAG Bot with n8n (No Code Required!)

So how do you actually build this? For enterprise architects or anyone who needs to prototype quickly, the low-code path is a game-changer. My tool of choice here is n8n.

n8n is a workflow automation tool that allows you to build complex processes using a visual, node-based canvas. It’s like super-glue for the modern enterprise. For AI, it has deep integrations with frameworks like LangChain, making it incredibly powerful for building RAG systems without writing a single line of code.

In n8n, a RAG workflow is a series of connected nodes. You can visually construct a pipeline that triggers when a new file is added to Google Drive, breaks it into chunks, generates embeddings, and saves them in a vector store like Pinecone. An AI Agent node then uses this store as a “tool” to answer questions. You can build and deploy a sophisticated RAG chatbot in a matter of hours, not weeks.
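
Under the hood, the nodes in that pipeline perform roughly the steps below. This is a hypothetical, dependency-free Python sketch, not what n8n or Pinecone actually run: `chunk`, `embed`, `InMemoryVectorStore` and `on_new_file` are illustrative names, and the “embedding” is a crude hashed bag of words standing in for a real embedding model. The shape of the pipeline (chunk, embed, store, then search by similarity) is the part that carries over.

```python
import hashlib
import math
import re

DIM = 1024  # toy embedding size; real embedding models use hundreds to thousands of dimensions


def chunk(text: str, size: int = 40, overlap: int = 10) -> list[str]:
    """Split text into windows of `size` words, each overlapping the previous by `overlap`."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be non-negative and smaller than size")
    words = text.split()
    chunks = []
    for start in range(0, len(words), size - overlap):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # this window already reaches the end of the text
    return chunks


def embed(text: str) -> list[float]:
    """Toy embedding: hash each word into one of DIM buckets, then L2-normalise."""
    vec = [0.0] * DIM
    for word in re.findall(r"[a-z0-9]+", text.lower()):
        digest = hashlib.sha256(word.encode()).digest()
        vec[int.from_bytes(digest[:4], "big") % DIM] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


class InMemoryVectorStore:
    """Hypothetical stand-in for a vector database such as Pinecone."""

    def __init__(self) -> None:
        self.items: list[tuple[list[float], str]] = []

    def add(self, texts: list[str]) -> None:
        self.items.extend((embed(t), t) for t in texts)

    def search(self, query: str, k: int = 2) -> list[str]:
        q = embed(query)
        # Vectors are unit length, so the dot product equals cosine similarity.
        ranked = sorted(
            self.items,
            key=lambda item: sum(a * b for a, b in zip(q, item[0])),
            reverse=True,
        )
        return [text for _, text in ranked[:k]]


def on_new_file(store: InMemoryVectorStore, text: str) -> None:
    """What a 'new file added' trigger would kick off: chunk, embed, store."""
    store.add(chunk(text))
```

Overlapping chunks are a common design choice: text near a chunk boundary appears in two neighbouring chunks, so it is less likely to be retrieved without its surrounding context.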

The Code-First Path: Architecting Production RAG with LangChain

For developers building production-grade applications, the pro-code path offers maximum power. One of the most widely used frameworks in this space is LangChain.

LangChain is an open-source framework that provides the essential building blocks for creating sophisticated AI applications. While n8n helps you visually connect the dots, LangChain gives you the code-level components to build robust, scalable systems from the ground up.

Its key strengths are:

- Modularity and Composability: LangChain provides standard interfaces for models and data sources, allowing you to swap components without rewriting your entire application. This reduces the risk of vendor lock-in.

- A Rich Ecosystem: It has a massive library of integrations with hundreds of model providers, databases, and APIs.

- Agentic Frameworks: With tools like LangGraph, LangChain provides a clear path from simple RAG chains to building complex AI agents—which sets us up perfectly for Friday’s discussion.

For an enterprise, LangChain is the toolkit for when you need full control over your AI’s logic, performance, and security in a production environment.
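
To illustrate why standard interfaces make components swappable, here is a small Python sketch using `typing.Protocol`. It mimics the idea rather than LangChain’s real classes: `ChatModel`, `Retriever`, `RAGChain` and the vendor stubs are hypothetical names, and LangChain’s own base classes and method names differ, so check its documentation before relying on any specific API.

```python
from dataclasses import dataclass
from typing import Protocol


class ChatModel(Protocol):
    """Anything with a generate(prompt) -> str method counts as a model."""

    def generate(self, prompt: str) -> str: ...


class Retriever(Protocol):
    """Anything with a search(question) -> list[str] method counts as a retriever."""

    def search(self, question: str) -> list[str]: ...


class VendorAModel:
    """Hypothetical stub for one model provider; it echoes the question line."""

    def generate(self, prompt: str) -> str:
        return "vendor-a: " + prompt.splitlines()[-1]


class VendorBModel:
    """Hypothetical stub for a second, interchangeable provider."""

    def generate(self, prompt: str) -> str:
        return "vendor-b: " + prompt.splitlines()[-1]


class FixedRetriever:
    """Stub retriever returning canned excerpts; a real one might query a vector store."""

    def __init__(self, excerpts: list[str]) -> None:
        self.excerpts = excerpts

    def search(self, question: str) -> list[str]:
        return self.excerpts


@dataclass
class RAGChain:
    """Depends only on the two interfaces, so either component can be swapped."""

    retriever: Retriever
    model: ChatModel

    def run(self, question: str) -> str:
        context = "\n".join(self.retriever.search(question))
        return self.model.generate(f"Context:\n{context}\n\nQuestion: {question}")


if __name__ == "__main__":
    chain = RAGChain(FixedRetriever(["Expense claims are due within 30 days."]), VendorAModel())
    print(chain.run("When are expense claims due?"))  # vendor-a: Question: When are expense claims due?
    chain.model = VendorBModel()  # swap the provider; nothing else changes
    print(chain.run("When are expense claims due?"))  # vendor-b: Question: When are expense claims due?
```

Because `RAGChain` only knows about the interfaces, replacing the model (or the retriever) is a one-line change; that is the property that keeps a codebase from being welded to a single vendor.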

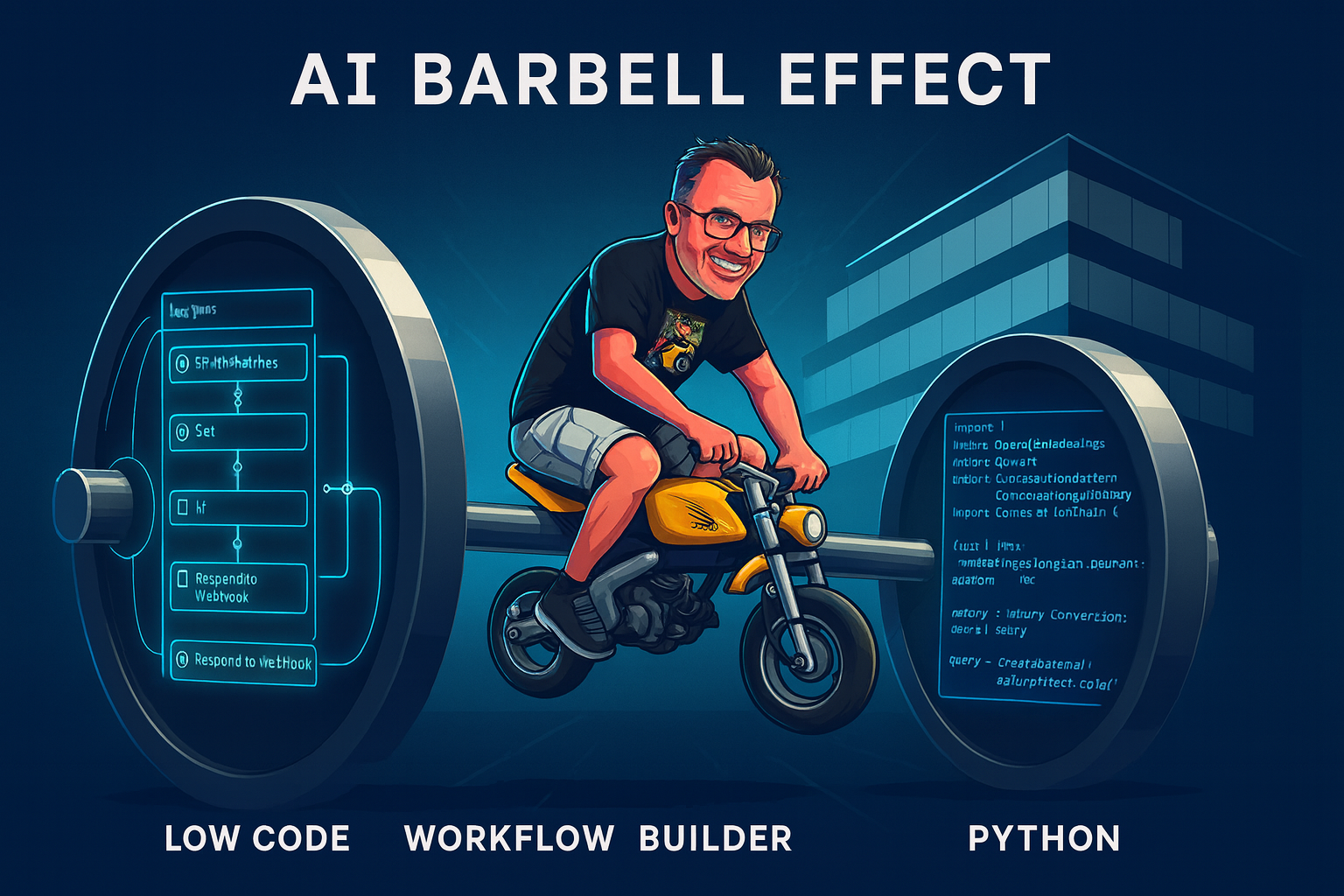

The AI “Barbell” Effect: Choosing Your Weapon

The emergence of these two paths—powerful low-code platforms like n8n and deep pro-code frameworks like LangChain—is creating what I call the “AI Barbell Effect.”

On one end, you have a high demand for accessible, visual tools that empower business users to build and automate quickly. On the other end, you have a high demand for deeply technical engineers who can use frameworks like LangChain to build the complex, scalable core AI systems. The space in the middle—the “just okay at coding” role—is getting squeezed. The market is rewarding both the rapid integrator and the deep architect.

To help you decide, here’s a quick comparison:

| Criterion | n8n (Low-Code) | LangChain (Pro-Code) |
| --- | --- | --- |
| Ease of Use | Very High (Visual, node-based) | Low (Requires Python/JS skills) |
| Speed of Development | Extremely Fast (Hours/Days) | Moderate (Days/Weeks) |
| Flexibility/Customisation | Good (with limitations) | Extremely High (Full control) |
| Production Control | Good for defined workflows | Excellent for complex systems |
| Target User | Enterprise Architect, Business Analyst | Software Engineer, AI/ML Engineer |

Ultimately, both are essential tools. The key is knowing which one to pick for the job at hand.
